
| Module: | MatchingBasic |
|---|---|

Finds a single occurrence of a predefined template on an image by analysing the normalized correlation between pixel values.

Applications

| Name | Type | Range | Description |
|---|---|---|---|
| inImage | Image | | Image on which object occurrence will be searched |
| inSearchRegion | ShapeRegion* | | Range of possible object centers |
| inSearchRegionAlignment | CoordinateSystem2D* | | Adjusts the region of interest to the position of the inspected object |
| inGrayModel | GrayModel | | Model of objects to be searched |
| inMinPyramidLevel | Integer | 0 - 12 | Defines the lowest pyramid level at which object position is still refined |
| inMaxPyramidLevel | Integer* | 0 - 12 | Defines the total number of reduced resolution levels that can be used to speed up computations |
| inIgnoreBoundaryObjects | Bool | | Flag indicating whether objects crossing image boundary should be ignored or not |
| inMinScore | Real | -1.0 - 1.0 | Minimum score of object candidates accepted at each pyramid level |
| inMaxBrightnessRatio | Real* | 1.0 - | Defines the maximal deviation of the mean brightness of the model object and the object present in the image |
| inMaxContrastRatio | Real* | 1.0 - | Defines the maximal deviation of the brightness standard deviation of the model object and the object present in the image |
| outObject | Object2D? | | Found object |
| outPyramidHeight | Integer | | Highest pyramid level used to speed up computations |
| outAlignedSearchRegion | ShapeRegion | | Transformed input shape region |
| diagImagePyramid | ImageArray | | Pyramid of iteratively downsampled input image |
| diagMatchPyramid | ImageArray | | Candidate object locations found at each pyramid level |
| diagScores | RealArray? | | Scores of the found object at each pyramid level |
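The two ratio inputs, inMaxBrightnessRatio and inMaxContrastRatio, can be illustrated with a small Python sketch. It is not the filter's implementation: it assumes that each "ratio" is the larger value divided by the smaller one (hence never below 1.0) and that `None` stands for an unset limit. The names `symmetric_ratio` and `passes_ratio_limits` are hypothetical.

```python
from statistics import mean, pstdev

def symmetric_ratio(a, b):
    """Larger value divided by the smaller one (assumed meaning of 'ratio')."""
    lo, hi = min(a, b), max(a, b)
    if lo <= 0:
        # Avoid division by zero: equal zeros count as identical, otherwise unbounded.
        return float("inf") if hi > 0 else 1.0
    return hi / lo

def passes_ratio_limits(model_pixels, found_pixels,
                        max_brightness_ratio=None, max_contrast_ratio=None):
    """Check mean brightness and brightness standard deviation against the limits."""
    if max_brightness_ratio is not None:
        if symmetric_ratio(mean(model_pixels), mean(found_pixels)) > max_brightness_ratio:
            return False
    if max_contrast_ratio is not None:
        if symmetric_ratio(pstdev(model_pixels), pstdev(found_pixels)) > max_contrast_ratio:
            return False
    return True

model = [10, 20, 30, 40]
found = [20, 40, 60, 80]  # twice as bright and twice the contrast

accepted = passes_ratio_limits(model, found, 2.5, 2.5)
rejected = passes_ratio_limits(model, found, max_brightness_ratio=1.5)
```

Under these assumptions a limit of 1.0 would accept only candidates whose statistics equal those of the model, and larger values progressively relax the check.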

Description

The operation matches the object model, inGrayModel, against the input image, inImage. The inSearchRegion input restricts the search so that object centers can be found only within this region. The inMinScore parameter sets the minimum score that an occurrence must reach to be considered valid.
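As an illustration of the kind of score that inMinScore is compared against, here is a minimal Python sketch of a normalized correlation between a template and an image patch of the same size. It assumes the zero-mean form of the formula, which yields values between -1.0 and 1.0. The filter's exact formula is not given here, and the function name `ncc_score` is hypothetical.

```python
import math

def ncc_score(patch, template):
    """Zero-mean normalized correlation of two equally sized grayscale grids."""
    p = [v for row in patch for v in row]
    t = [v for row in template for v in row]
    if len(p) != len(t) or not p:
        raise ValueError("patch and template must be non-empty and the same size")
    mean_p = sum(p) / len(p)
    mean_t = sum(t) / len(t)
    num = sum((a - mean_p) * (b - mean_t) for a, b in zip(p, t))
    den = math.sqrt(sum((a - mean_p) ** 2 for a in p) *
                    sum((b - mean_t) ** 2 for b in t))
    # A flat patch or template has zero variance; treat it as "no correlation".
    return num / den if den > 0 else 0.0

template = [[10, 20], [30, 40]]
brighter = [[60, 70], [80, 90]]  # same pattern, uniformly brighter
inverted = [[40, 30], [20, 10]]  # reversed pattern

score_same_pattern = ncc_score(brighter, template)
score_inverted = ncc_score(inverted, template)
```

With this form a uniform brightness offset does not change the score, while an inverted pattern scores close to -1.0.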

The computation time of the filter depends on the size of the model and on the sizes of inImage and inSearchRegion, but also on the value of inMinScore. This parameter is a score threshold: based on its value, a partial computation can be sufficient to reject a location as a valid object instance.

Moreover, an image pyramid is used. Only the highest pyramid level is searched exhaustively, and potential candidates are then validated at lower levels. The inMinPyramidLevel parameter determines the lowest pyramid level used to validate such candidates. Setting this parameter to a value greater than 0 may speed up the computation significantly, especially for high-resolution images, but the accuracy of the found object occurrences can be reduced.

A larger inMinScore generates fewer potential candidates on the highest level to verify on lower levels. Note, however, that some valid occurrences with a final score above this threshold can be missed: on higher levels the score can be slightly lower than on lower levels, so an occurrence that would be accepted on the lowest level can be rejected on some higher level. The diagMatchPyramid output shows all potential candidates recognized on each pyramid level and can be helpful when tuning the parameters.
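The coarse-to-fine strategy can be sketched in Python as follows. This is a simplified illustration under stated assumptions, not the filter's algorithm: it uses 2x2 averaging to build the pyramid, a zero-mean normalized correlation as the score, a 3x3 refinement neighbourhood, and no rotation or search region. All function names are hypothetical.

```python
import math

def ncc(a, b):
    """Zero-mean normalized correlation of two equally sized 2D grids."""
    p = [v for row in a for v in row]
    t = [v for row in b for v in row]
    mp, mt = sum(p) / len(p), sum(t) / len(t)
    num = sum((x - mp) * (y - mt) for x, y in zip(p, t))
    den = math.sqrt(sum((x - mp) ** 2 for x in p) * sum((y - mt) ** 2 for y in t))
    return num / den if den > 0 else 0.0

def downsample(img):
    """Halve the resolution by averaging 2x2 blocks."""
    return [[(img[2*y][2*x] + img[2*y][2*x+1] + img[2*y+1][2*x] + img[2*y+1][2*x+1]) / 4.0
             for x in range(len(img[0]) // 2)]
            for y in range(len(img) // 2)]

def build_pyramid(img, max_level):
    levels = [img]
    for _ in range(max_level):
        levels.append(downsample(levels[-1]))
    return levels

def score_at(img, tmpl, top, left):
    h, w = len(tmpl), len(tmpl[0])
    if top < 0 or left < 0 or top + h > len(img) or left + w > len(img[0]):
        return float("-inf")  # this sketch does not score out-of-image positions
    return ncc([row[left:left + w] for row in img[top:top + h]], tmpl)

def locate(img, tmpl, min_level, max_level, min_score):
    """Return (score, top, left) of the best match, or None if nothing passes."""
    imgs, tmpls = build_pyramid(img, max_level), build_pyramid(tmpl, max_level)
    top_img, top_tmpl = imgs[max_level], tmpls[max_level]
    # Exhaustive search only on the highest (coarsest) level.
    candidates = [(y, x)
                  for y in range(len(top_img) - len(top_tmpl) + 1)
                  for x in range(len(top_img[0]) - len(top_tmpl[0]) + 1)
                  if score_at(top_img, top_tmpl, y, x) >= min_score]
    # Validate and refine candidates on each lower level down to min_level.
    for level in range(max_level - 1, min_level - 1, -1):
        refined = set()
        for y, x in candidates:
            score, ry, rx = max((score_at(imgs[level], tmpls[level], 2*y + dy, 2*x + dx),
                                 2*y + dy, 2*x + dx)
                                for dy in (-1, 0, 1) for dx in (-1, 0, 1))
            if score >= min_score:  # the same threshold is applied on every level
                refined.add((ry, rx))
        candidates = sorted(refined)
    if not candidates:
        return None
    score, y, x = max((score_at(imgs[min_level], tmpls[min_level], cy, cx), cy, cx)
                      for cy, cx in candidates)
    factor = 2 ** min_level  # report the position in full-resolution pixels
    return score, y * factor, x * factor

template = [[10, 10, 200, 200],
            [10, 10, 200, 200],
            [90, 90, 50, 50],
            [90, 90, 50, 50]]
image = [[0.0] * 16 for _ in range(16)]
for dy, row in enumerate(template):
    for dx, value in enumerate(row):
        image[6 + dy][8 + dx] = float(value)

result = locate(image, template, min_level=0, max_level=1, min_score=0.9)
```

Because the threshold is checked on every level, a candidate that falls below `min_score` on a coarse level never reaches the finer levels. This mirrors the behaviour described above, where valid occurrences can be missed.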

To be able to locate objects which are partially outside the image, the filter assumes that there are only black pixels beyond the image border.
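The black-border assumption can be illustrated with a short Python sketch that cuts a patch from an image and reads every pixel outside the image as 0. The function name `patch_with_black_border` is hypothetical, and the sketch only shows the padding idea, not the filter's internals.

```python
def patch_with_black_border(image, top, left, height, width):
    """Cut a height x width patch; pixels outside the image read as 0 (black)."""
    rows, cols = len(image), len(image[0])
    return [[image[y][x] if 0 <= y < rows and 0 <= x < cols else 0
             for x in range(left, left + width)]
            for y in range(top, top + height)]

image = [[1, 2, 3],
         [4, 5, 6],
         [7, 8, 9]]

# A 2x2 patch whose top-left corner lies one pixel outside the image.
corner = patch_with_black_border(image, -1, -1, 2, 2)
```

A patch extracted this way can be scored like any other, which is why an object on a dark background is more likely to keep a high score when it crosses the image border than one on a bright background.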

outObject.Point contains the model reference point of the matched object occurrence. outObject.Angle contains the rotation angle of the object. outObject.Match combines the position and the angle of the found match into a value of the Rectangle2D type. outObject.Alignment contains the transform that maps geometrical objects defined in the context of the template image to the position of outObject.Match. This value can later be used, for example, by filters from the 1D Edge Detection or Shape Fitting categories.
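To show what applying such an alignment means geometrically, the Python sketch below maps a point given in the template's local coordinates into image coordinates. It assumes that the alignment can be described by an origin and a rotation angle in degrees, with a scale of 1. The function name `to_image_coords` and the rotation direction are assumptions made for illustration.

```python
import math

def to_image_coords(local_point, origin, angle_deg):
    """Rotate a local point by angle_deg and translate it to the origin."""
    lx, ly = local_point
    ox, oy = origin
    a = math.radians(angle_deg)
    return (ox + lx * math.cos(a) - ly * math.sin(a),
            oy + lx * math.sin(a) + ly * math.cos(a))

# A measurement point defined 10 pixels to the right of the template origin,
# with the object found at (100, 50) and rotated by 90 degrees.
x, y = to_image_coords((10.0, 0.0), origin=(100.0, 50.0), angle_deg=90.0)
```

Geometry defined once on the template image can then follow the object wherever it is found, which is the purpose of passing the alignment to subsequent filters.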

Hints

- Connect the inImage input with the image you want to find objects on. Make sure that this image is available (the program was previously run).

- Click the "..." button at the inGrayModel input to open the GUI for creating template matching models.

- The template region you select on the first screen should contain characteristic pixel patterns of the object, but should not be too big.

- Make sure that the inMinAngle / inMaxAngle range corresponds to the expected range of object rotations.

- If you expect the object to appear at different scales, modify the inMinScale and inMaxScale parameters. Use them with care, as they affect the speed.

- Do not use wide ranges of rotations and scaling simultaneously, as the resulting model may become extremely large.

- On the second page (after clicking "Next"), define the object rectangle that you want to get as the result. Please note that the rotation of this rectangle defines the object rotation, which is constrained by the inMinAngle / inMaxAngle parameters.

- If an object is not detected, first try decreasing inMaxPyramidLevel, then try decreasing inMinScore. However, if you need to lower inMinScore below 0.5, then probably something else is wrong.

- If all the expected objects are correctly detected, try achieving higher performance by increasing inMaxPyramidLevel and inMinScore.

- Please note that, due to the pyramid strategy used for speeding up computations, some objects whose final score would be above inMinScore may not be found. This may be surprising, but it is by design. The reason is that the same minimum value is applied at every pyramid level, and many objects exhibit a lower score at higher pyramid levels, so they get discarded before they can be evaluated at the lowest pyramid level. Decrease inMinScore experimentally until all objects are found.

- If precision of matching is not very important, you can also gain some performance by increasing inMinPyramidLevel.

- If the performance is still not satisfactory, go back to model definition and try reducing the range of rotations and scaling as well as the precision-related parameters: inAnglePrecision and inScalePrecision.

- Define inSearchRegion to limit possible object locations and speed up computations. Please note that this is the region to which the outObject.Point results belong (and NOT the region within which the entire object has to be contained).

- Smoothing of the input image can significantly improve performance of this tool.
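The smoothing mentioned in the last hint can be as simple as a small mean filter applied to the image before matching. The Python sketch below shows a 3x3 mean filter that averages only the neighbours lying inside the image. The function name `smooth_3x3` is hypothetical, and the choice of filter and kernel size is an assumption rather than a recommendation from this page.

```python
def smooth_3x3(image):
    """Replace each pixel with the mean of its 3x3 neighbourhood inside the image."""
    rows, cols = len(image), len(image[0])
    out = []
    for y in range(rows):
        out_row = []
        for x in range(cols):
            neighbours = [image[j][i]
                          for j in range(max(0, y - 1), min(rows, y + 2))
                          for i in range(max(0, x - 1), min(cols, x + 2))]
            out_row.append(sum(neighbours) / len(neighbours))
        out.append(out_row)
    return out

# A single noisy pixel is spread out and strongly attenuated.
noisy = [[0, 0, 0],
         [0, 90, 0],
         [0, 0, 0]]
smoothed = smooth_3x3(noisy)
```

If the input image is smoothed, it is usually sensible to create the model from an image prepared in the same way, so that the template and the input image have comparable statistics.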

Examples

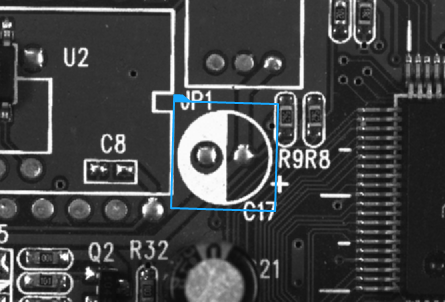

Locating single object with the gray-based method (inMaxPyramidLevel = 4).

Remarks

Read more about Local Coordinate Systems in Machine Vision Guide: Local Coordinate Systems.

More information about creating models can be found in the article: Creating Models for Template Matching. Additional information about Template Matching can be found in Machine Vision Guide: Template Matching.

Hardware Acceleration

This operation is optimized for SSE2 technology for pixels of type: UINT8.

This operation is optimized for AVX2 technology for pixels of type: UINT8.

This operation is optimized for NEON technology for pixels of type: UINT8.

This operation supports automatic parallelization for multicore and multiprocessor systems.

Complexity Level

This filter is available on Basic Complexity Level.

Filter Group

This filter is a member of the LocateObjects_NCC filter group.

See Also

- LocateMultipleObjects_NCC – Finds all occurrences of a predefined template on an image by analysing the normalized correlation between pixel values.

- CreateGrayModel – Creates a model for NCC or SAD template matching.

- LocateSingleObject_SAD – Finds a single occurrence of a predefined template on an image by analysing the Square Average Difference between pixel values.
